In a nondescript office park outside Vancouver, with views of snow-capped mountains in the distance, is a mirrored building where very special work is being done. The company is D-Wave, the quantum computing company. D-Wave's mission is to build a computer which will solve humanity's grandest challenges.

D-Wave aims to develop the first quantum computer in the world; perhaps they already have. The advent of quantum computers would be a sea change that would allow for the breaking of cryptography, better artificial intelligence, and exponential increases in computing speed for certain applications. The idea for quantum computers has been bubbling since Richard Feynman first proposed that the best way to simulate quantum phenomena would be with quantum systems themselves, but it has been exceedingly difficult to engineer a computer that can exploit the possibilities of quantum information processing. Hardly a decade ago D-Wave began with a misstep, which is the origin of their name. Their first idea would have used yttrium barium copper oxide (YBCO), a charcoal-looking material with a superconducting transition temperature above the boiling point of liquid nitrogen. This makes YBCO the standard science lab demonstration of superconducting magnetic levitation. Ultimately YBCO, with its crystalline structure, was found to be an imperfect material for the job, but the cloverleaf d-wave symmetry, shaped like a d atomic orbital, that characterizes YBCO's superconductivity stuck as D-Wave's name. The vision of D-Wave did not change, but their approach did. They realized they would have to engineer and build the majority of the technology necessary to create a quantum computer themselves. They even built their own superconducting electronics foundry to perform the electron beam lithography and metallic thin film evaporation processes necessary to create the qubit microchips at the heart of their machine.

I recently got to visit D-Wave, the factory of quantum dreams, for myself. The business park that D-Wave is in is so nondescript that we drove right by it at first. I was expecting lasers and other blinking lights, but instead our rented University of Washington van pulled into the wrong parking lot, which we narrowly reversed out of. In the van were several other quantum aficionados, students, and professors, mostly from computer science, who were curious about what a quantum computer actually looks like. I am going to cut the suspense and tell you now that a quantum computer looks like a really big black refrigerator or maybe a small room. The chip at the heart of the room is cooled to a few millikelvin, colder than interstellar space, and that is where superconducting circuits count electric quantum sheep. The tour began with us milling around a conference room while our guide, a young scientist and engineer, held in his hand a wafer containing hundreds of quantum processors. I took a picture, and after I left that conference room they did not let me take any more pictures.

Entering the laboratory it suddenly dawned on me that this wasn't just a place for quantum dreams; it was real and observable. The entire notion of a quantum computer was more tangible. A quantum computer is a machine which uses quantum properties like entanglement to perform computations on data. The biggest similarity between a quantum computer and a regular computer is that they both perform algorithms to manipulate data. The data, or bits, of a quantum computer are known as qubits. A qubit is not limited to the values of 0 or 1 as in a classical computer but can be in a superposition of these states simultaneously. Sometimes a quantum computer doesn't even give you the same answer to the exact same question. Weird. The best way to conceive of quantum computing may be to imagine a computation where each possible output of the problem has a positive or negative probability amplitude (a strange quantum idea there), and where the amplitudes for wrong answers cancel to zero while those for right answers are reinforced.
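
To make the idea of cancelling amplitudes concrete, here is a minimal sketch using a textbook single-qubit gate, the Hadamard gate. This is my own illustration and not something specific to the D-Wave machine, which does not run gate-style programs; the function names are made up for the example. Applying the gate once gives a 50/50 superposition, and applying it twice makes the two contributions to the "1" outcome cancel.

```python
import math

# Hadamard gate as a 2x2 matrix (assumed textbook example, not D-Wave hardware).
R = 1 / math.sqrt(2)
HADAMARD = [[R, R],
            [R, -R]]

def apply_gate(gate, state):
    """Multiply a 2x2 gate by a 2-element vector of amplitudes."""
    return [sum(gate[row][col] * state[col] for col in range(2))
            for row in range(2)]

def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return [abs(amplitude) ** 2 for amplitude in state]

zero = [1.0, 0.0]                   # qubit starts in the "0" state
once = apply_gate(HADAMARD, zero)   # equal superposition of 0 and 1
twice = apply_gate(HADAMARD, once)  # the +1/2 and -1/2 paths to "1" cancel

print(probabilities(once))
print(probabilities(twice))
```

The negative entry in the matrix is what allows cancellation; ordinary probabilities, which are never negative, could only add up.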

The power of quantum computers is nicely understood within the theoretical framework of computational complexity theory. Say for example that I give you the number $4.60941636 \times 10^{18}$ and ask for the prime factors of this number. Now if someone were to give you the prime factors you could verify them as correct very quickly, but what if I asked you to generate the prime factors for me? (I dare you. I have the answer. I challenge you. In actuality this challenge is easy. Numbers of this size aren't that hard to factor; a friend says they found a webpage that will do it. But the problem doesn't scale well to larger numbers.) The quintessential problem here is the P versus NP question, which asks whether every problem whose solution can be verified quickly can also be solved quickly. Quickly is defined as polynomial time, meaning that the running time of the algorithm scales as the size of the input raised to some power. Computational complexity theory basically attempts to categorize different kinds of problems depending on how fast a solution can be found as the size of the problem grows. A P class problem is one in which the solution can be found within polynomial time. An NP class problem is one in which the solution can be verified in polynomial time. So if I ask you for the prime factors of my number above, that is an NP problem because given the factors you could verify the answer quickly, but it would be very difficult to calculate the factors given only the number. Every P problem is also in NP; the open question is whether P is a proper subset of NP, which appears likely. That would mean that problems verifiable in polynomial time are not necessarily solvable in polynomial time. The issue is that for some very interesting problems in the real world we could verify the answer if we stumbled upon it, but we won't even be able to stumble upon the answer in a time shorter than the age of the universe with current computers and algorithms. What we know we know and what we think we know is a sea of confusion, but the popular opinion, and where people would place their wagers, is that P is not equal to NP.
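
The gap between checking and finding can be sketched in a few lines. This is a toy illustration under my own assumptions: the number used is the product of the primes 10007 and 10009, not the number from my challenge, and trial division is the most naive factoring method rather than the best known one. Checking a claimed answer takes one multiplication, while finding it takes thousands of trial divisions, a count that grows quickly as the number gets longer.

```python
def verify_product(n, factors):
    """Check a claimed factorization by multiplying the factors back together.

    A full check would also confirm each factor is prime; that step is
    left out of this sketch.
    """
    product = 1
    for factor in factors:
        product *= factor
    return product == n

def trial_division(n):
    """Factor n by trying divisors in order; return (factors, divisions tried)."""
    factors = []
    steps = 0
    divisor = 2
    while divisor * divisor <= n:
        steps += 1
        if n % divisor == 0:
            factors.append(divisor)
            n //= divisor
        else:
            divisor += 1
    if n > 1:
        factors.append(n)  # whatever remains is itself prime
    return factors, steps

n = 10007 * 10009  # illustrative semiprime, chosen for this example
found, steps = trial_division(n)
print(found, "found after", steps, "trial divisions")
print("verified with one multiplication:", verify_product(n, found))
```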

Suddenly, with mystique and spooky actions at a distance, quantum computing comes swooping in and claims to be able to solve some NP problems and all P problems very quickly. A general quantum computer would belong to the complexity class of BQP. There is a grand question at hand: is BQP in NP? (More generally, is BQP contained anywhere in the polynomial hierarchy? The polynomial hierarchy generalizes P and NP using a particular kind of idealized abstract computer, an oracle, with the ability to solve certain decision problems in a single step. See the paper BQP and the Polynomial Hierarchy by Scott Aaronson, who is an outspoken critic of D-Wave.) At this time we cannot even claim to have evidence that BQP is not part of NP, but most scientists close to the problem think that BQP is not a subset of NP. Quantum computing researchers are trying to get better evidence that quantum computers cannot solve NP-complete problems in polynomial time (if NP were a subset of BQP then the polynomial hierarchy collapses). A reasonable wager I would take is that P is a (proper) subset of BQP and BQP is itself a (proper) subset of NP. This claim has not been rigorously proved, but it is suspected to be true, and there are some NP problems, such as prime factorization and some combinatorial problems, for which it has been shown to hold.

There might be an elephant in the room here. The D-Wave architecture is almost certainly attacking an NP-complete problem, and reasonable logic says that quantum computers will solve P problems and some NP problems, but not NP-complete problems (this is also not proven, but suspected). An NP-complete problem is a problem in which the time it takes to compute the answer may reach into millions or billions of years even for moderately large versions of the problem. Thus we don't know if this particular quantum computer D-Wave has built even allows us to do anything efficiently that we couldn't already do efficiently on a classical computer; it doesn't seem to be a BQP-class computer, so it cannot, for example, solve the prime factorization problems behind cryptography. So, yes, it is a quantum machine, but we don't have any evidence it is an interesting machine. At the same time we don't have any evidence it is an uninteresting machine. It is not general purpose enough for it to be clear that it is a big deal, nor is it so trivial that it is totally uninteresting.

The D-Wave lab was bigger than I expected, and it was at once more cluttered and more precise than I thought it would be. It turns out the entire process of quantum computing follows this trend. There are a lot of factors they contend with, and on the tour I saw people dead focused with their eyes on a microscope executing precise wiring, coders working in pairs, theoreticians gesturing at a chaotic white board, and even automated processes being carried out by computers with appropriately futuristic-looking displays. The engineering problems D-Wave faces include circuit design, fabrication, cryogenics, magnetic shielding and so on. There is too much to discuss here, so I will focus on what I think are scientifically the two most interesting parts of the D-Wave quantum computer: the qubit physics and the quantum algorithm which they implement. In fact these two parts of their computer are deeply intertwined.

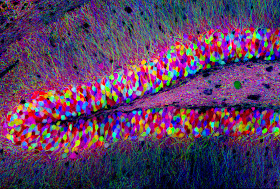

In the image above is a wafer of Rainier core superconducting microchips. The chips are built to exacting specifications and placed at the center of the D-Wave quantum computer in isolation from external noise such as magnetic fields and heat. In the quantum world heat is noise, so the chips are kept at a temperature of a few millikelvin to preserve the quantum properties of the system. On each chip are 128 superconducting flux qubits. The qubit is the quantum of information with which this computer works. There are various ways to create a qubit, such as quantum dots, photons, electrons, and so on, but D-Wave has gone with the flux qubit design for engineering reasons.

A flux qubit is a micrometer-sized loop of superconducting material (in this case niobium) wherein a current circulates around the loop either clockwise or counterclockwise in a quantized manner, such that the loop is either in a spin up (that is +1 or ↑) or a spin down (that is -1 or ↓) state. There is an energy barrier that keeps the loop from spontaneously flipping its spin (or current circulation direction), and this barrier can be modulated through various control schemes. They control these loops using compound Josephson junctions and SQUIDs with their own proprietary techniques, while borrowing heavily from decades of advancement in solid state physics.

Perhaps even more important than the qubit itself is the architecture and the algorithm implemented by the computer. They use a quantum adiabatic algorithm based on the Ising model. When I realized that their algorithm was based on the Ising model I couldn't help but marvel at the powerful simplicity. The Ising model is a statistical mechanics model of ferromagnetism where the atoms (vertices or variables) in a metal (crystal lattice or graph) are discrete variables with spin values that take on spin up or spin down values, and each spin interacts with its nearest neighbors. It is a simple model that leads to beautiful complexity (for example see this article on the Ising model here), especially when you allow the interaction of each spin with its neighbor to be finely controlled or when you allow the connectivity of the vertices to be varied. The Ising model is easily extended to more abstract problems. For example we can connect every single vertex to every other vertex; it wouldn't look like a crystalline structure any more, but it makes sense on paper or with wires on a chip.

The quantum adiabatic algorithm borrows ideas from physics, such as the process of annealing and spin states in the Ising model, to solve a generalized optimization problem. During my tour of D-Wave we continued to talk about the algorithm and what was possible, and the whole concept slowly crystallized for me, but it is not immediately obvious why they designed the computer the way they did, because their implementation would not create a universal quantum computer. Why the quantum adiabatic algorithm?

- Quantum annealing is a physically motivated method for quantum computing which is not thwarted by thermodynamics or decoherence.

- Real world optimization problems can be modeled using the Ising spin glass. The hardware mirrors this.

- More complicated architectures, such as a universal adiabatic quantum computer, will borrow from the quantum annealing approach.

D-Wave has not created a general purpose quantum computer. They have created a quantum computer which runs the adiabatic quantum algorithm or, equivalently, solves an optimization problem. They use quantum annealing to find the global minimum of a given objective function with the form of... Wait, wait, let me have a kitten tell you instead (math warning for the next two paragraphs):

Here $E$ is the value to be minimized over the total system state $s$ subject to the constraint of $J_{ij}$ (where $J_{ij} < 1$) acting between each pair of elements $s_i$ and $s_j$ (where all $s_i = \pm 1$). Each element $s_i$ is weighted by the value $h_i$ (where $h_i > -1$). The nearest-neighbor spins of each $ij$ pair are determined by the connections between vertices in a physics application, or by the microchip's graph architecture of actual physical connections on the D-Wave chip. The coupling between $i$ and $j$ is determined by $J_{ij}$, so $J$ represents your knowledge of how each component of the system interacts with its neighbors. Immediately we extend the above minimization parameterization to the physical implementation of quantum flux qubits.
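
For readers who want the objective in symbols, an Ising energy of the kind described here is commonly written as below. Treat this as a sketch: the plus signs in front of both sums and the restriction of the second sum to connected pairs with $i < j$ are conventions I am assuming, and other references flip the signs.

```latex
E(s) = \sum_{i} h_i \, s_i + \sum_{i<j} J_{ij} \, s_i \, s_j ,
\qquad s_i \in \{-1, +1\}
```

The first sum rewards or penalizes each spin on its own, and the second sum rewards or penalizes pairs of connected spins for agreeing or disagreeing.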

In this new form the optimization problem is written as a Hamiltonian which determines the interaction and evolution of the system. The variables are modified, $s_j \to \sigma^z_j$ and $s_i \to \sigma^z_i$, where the $\sigma^z_i$ are Pauli matrices at site $i$ for a spin-1/2 qubit. Then $h_i$ is the transverse field that represents transitions up and down between the two spin states, ↑ and ↓, of each spin. Here $K_{ij}$ is the weighting that defines the interaction between the qubits. The problem is to anneal the system as closely as possible to its classical ground state with the desired $K_{ij}$.
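
As a rough guide to what such a Hamiltonian looks like, a textbook transverse-field Ising form that matches the roles given to $h_i$ and $K_{ij}$ above is sketched below. The overall signs, the use of $\sigma^x_i$ for the transverse term, and the absence of a separate bias term on each $\sigma^z_i$ are my assumptions, so the expression D-Wave actually uses may differ in those details.

```latex
H = \sum_{i} h_i \, \sigma^x_i + \sum_{i<j} K_{ij} \, \sigma^z_i \, \sigma^z_j
```

The $\sigma^x_i$ term flips individual spins between ↑ and ↓, while the $\sigma^z_i \sigma^z_j$ term assigns an energy to each pair of coupled qubits depending on whether they point the same way.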

The D-Wave computer runs the quantum adiabatic algorithm by initializing the spins of the flux qubits in their ground state with a simple Hamiltonian. Initially the potential well for the spin of each qubit is U shaped; the ground state of the qubits when they are configured in this mode is a superposition of the |↑> and |↓> flux basis states. Then the qubits are adiabatically, or slowly, evolved to the specific Hamiltonian which encodes the optimization problem that is to be solved; the potential is evolved to the double-welled configuration, at which point the |↑> and |↓> states start to become the dominant basis. Actually, the final configuration is not exactly a symmetric double well; it has some relative energy difference between the two states which biases the machine towards the encoded problem. Evolving the Hamiltonian can be thought of as modifying the energy barrier between the spin up and down states for each flux qubit. In a real system each potential well has multiple possible energy levels besides the lowest energy state, which is where the ideal calculation is performed. According to the adiabatic theorem, a system evolved slowly enough remains in the ground state, so that at the end the state of the system describes the solution to the problem. However, in a real machine noise, such as the ambient local heat, can still disturb the system out of the ground state. A key advantage to the D-Wave approach is robustness to noise in many situations. The slower the Hamiltonian is evolved, the more the process adheres to the ideal adiabatic theoretical calculation. Performing the calculation more slowly decreases the chance of jumping out of the ground state. Adding more qubits makes the energy gap at the tipping point smaller. Thus engineering a machine with more qubits is hard.
Interestingly, because quantum machines have statistical uncertainties, each computation will have uncertainties which can be reduced either by running each calculation more slowly (and we are talking a few microseconds here) or by running the same calculation many times and seeing what different answers come up. As it turns out, it is usually faster to run the calculation many times and compare answers than to run one long calculation.
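
The "run it many times and keep the best answer" strategy can be sketched with a purely classical stand-in. The code below is ordinary simulated annealing, not quantum annealing and not D-Wave's software; the function names, the cooling schedule, and the four-spin example problem are all made up for illustration, and the energy uses an assumed plus-sign Ising convention.

```python
import math
import random

def energy(s, h, J):
    """Ising energy: sum_i h_i*s_i + sum over coupled pairs J_ij*s_i*s_j."""
    total = sum(h[i] * s[i] for i in range(len(s)))
    total += sum(j_ij * s[i] * s[j] for (i, j), j_ij in J.items())
    return total

def anneal_once(h, J, rng, sweeps=200, t_start=2.0, t_end=0.05):
    """One classical annealing run: lower the temperature while flipping spins."""
    n = len(h)
    s = [rng.choice((-1, 1)) for _ in range(n)]
    for k in range(sweeps):
        # Geometric cooling from t_start down to t_end.
        t = t_start * (t_end / t_start) ** (k / (sweeps - 1))
        for i in range(n):
            before = energy(s, h, J)
            s[i] = -s[i]
            delta = energy(s, h, J) - before
            # Keep downhill moves; keep uphill moves with probability exp(-delta/t).
            if delta > 0 and rng.random() >= math.exp(-delta / t):
                s[i] = -s[i]  # undo the rejected flip
    return s, energy(s, h, J)

def best_of(h, J, runs=20, seed=0):
    """Repeat the noisy run several times and keep the lowest-energy result."""
    rng = random.Random(seed)
    return min((anneal_once(h, J, rng) for _ in range(runs)), key=lambda r: r[1])

# Hypothetical problem: a chain of four spins that each want to oppose their neighbor.
h = [0, 0, 0, 0]
J = {(0, 1): 1, (1, 2): 1, (2, 3): 1}
state, e = best_of(h, J)
print(state, e)
```

Any single run may end in a poor state, but the lowest energy seen across many short runs is a good estimate of the true minimum, which is the same bookkeeping you would do with repeated runs on the real machine.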

The theoretical minimization problem that is solved is best understood separately from what the actual qubits are doing. Over at the D-Wave blog, Hacking the Multiverse, they liken the optimization problem to finding the best setting for a bunch of light switches that have various weightings. Each light switch can be either on or off and can have either a positive or a negative weighting, the $h_i$ term above, and it can have a dependency on any other switch in the system, determined by the $J_{ij}$ term. It turns out to be a really hard problem, as for just 100 switches there would be $2^{100}$ possible ways to arrange the switches.
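
Here is a minimal sketch of the light switch problem solved the slow way, by trying every setting. The three-switch weights and couplings are numbers I made up, each switch is represented as -1 or +1, and the energy uses an assumed plus-sign convention for both the $h_i$ and $J_{ij}$ terms.

```python
from itertools import product

def switch_energy(s, h, J):
    """Weighted sum over single switches plus weighted sum over coupled pairs."""
    total = sum(h[i] * s[i] for i in range(len(s)))
    total += sum(j_ij * s[i] * s[j] for (i, j), j_ij in J.items())
    return total

def brute_force(h, J):
    """Try all 2**N settings and return (best setting, its energy)."""
    best = None
    for s in product((-1, 1), repeat=len(h)):
        e = switch_energy(s, h, J)
        if best is None or e < best[1]:
            best = (s, e)
    return best

# Hypothetical three-switch problem.
h = [0.5, -0.2, 0.1]
J = {(0, 1): -1.0, (1, 2): 0.8}
setting, e = brute_force(h, J)
print(setting, e)

# The search space doubles with every added switch.
print(2 ** 3, "settings for 3 switches;", 2 ** 100, "settings for 100 switches")
```

Three switches mean only eight settings to check, but the same loop over 100 switches would never finish, which is why a machine that settles into the low-energy setting physically is so appealing.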

Traditionally the first program a coder writes in a new language is a simple print statement which says Hello world. On a quantum computer the first program you write says Hello multiverse! You could write this program on a D-Wave. Yes, you really can, because you can go out and buy one.

Lockheed Martin bought one earlier this year for ten million dollars. The detractors of D-Wave would say you are not getting a real quantum computer, but then why did Lockheed Martin buy one? It is legitimate to ask, is D-Wave the first true quantum computer? This of course depends on your definition of a quantum computer. The answer is probably no if you want a universal quantum computer (which would belong to the BQP complexity class discussed earlier). Probably no here means that reasonable computer scientists studying quantum computers have excellent reason to believe the answer is no, but they lack rigorous mathematical proof. On the other hand, if you are looking for a computer which exploits quantum effects to implement a specific purpose quantum algorithm, then I think you can safely say, yes, this is a quantum computer. I am just a naive astronomer though, so don't take my word for it. So let me clarify and say that just because a computer exploits quantum mechanics does not make it a quantum computer. All microchips today are small enough that the designers know something about quantum mechanics, maybe they even have to account for it in the chip's design, but crucially the compilers and the code written for the machine have no knowledge of the quantum mechanics. The algorithms run on the machine assume nothing about quantum mechanics in our universe. However, a real quantum computer would obviously be programmed according to the rules of quantum mechanics. Indeed the D-Wave computer is executing an algorithm which explicitly takes into account quantum mechanics. Further, whether or not the D-Wave computer is actually a quantum computer that will satisfy computer scientists' definition is a moot point compared to asking if it is useful. Currently D-Wave is running experiments that show that the speed scaling of their machine as a function of inputs is, hopefully, better than classical computers and algorithms.
In the future they will have to show with double blind experiments that their machine scales better than classical machines. If they can execute calculations in a few microseconds which take classical computers decades, I don't care if you call it the one true quantum computer or an oracle, I will just want one.

References

Harris, R., Johansson, J., Berkley, A., Johnson, M., Lanting, T., Han, S., Bunyk, P., Ladizinsky, E., Oh, T., Perminov, I., Tolkacheva, E., Uchaikin, S., Chapple, E., Enderud, C., Rich, C., Thom, M., Wang, J., Wilson, B., & Rose, G. (2010). Experimental demonstration of a robust and scalable flux qubit. Physical Review B, 81 (13). DOI: 10.1103/PhysRevB.81.134510

Harris, R., Johnson, M., Han, S., Berkley, A., Johansson, J., Bunyk, P., Ladizinsky, E., Govorkov, S., Thom, M., Uchaikin, S., Bumble, B., Fung, A., Kaul, A., Kleinsasser, A., Amin, M., & Averin, D. (2008). Probing Noise in Flux Qubits via Macroscopic Resonant Tunneling. Physical Review Letters, 101 (11). DOI: 10.1103/PhysRevLett.101.117003